Chapter 2: Calculation Era (1936-1955): Global Origins of Machine Intelligence

2.1 Parallel Computational Visions

The conventional narrative presents AI's origins through a handful of Western pioneers—Turing's theoretical insights, ENIAC's computational power, Shannon's information theory. While these contributions were indeed foundational, this singular focus obscures the global, parallel development of computational intelligence that characterized the pre-digital era.

Theoretical Foundations Across Continents

Alan Turing's 1936 paper "On Computable Numbers" established the theoretical foundation for mechanical computation, introducing what we now call Turing machines [Core Claim: Turing 1936]. Yet this breakthrough emerged alongside complementary insights worldwide. In Germany, Konrad Zuse was developing the Z3, an electromechanical relay machine completed in 1941 that is widely regarded as the first working programmable, fully automatic digital computer and that was used for aircraft wing flutter calculations [Core Claim: Zuse 1993]. Unlike ENIAC, which represented numbers in decimal, the Z3 used binary arithmetic, the representation that modern computing architectures adopted.

Meanwhile, Soviet mathematician Andrei Kolmogorov was laying groundwork for cybernetics through his work on stochastic processes and information theory, providing mathematical frameworks that would later prove crucial for machine learning [Context Claim: Gerovitch 2002]. These parallel developments suggest that computational intelligence emerged from fundamental mathematical insights that transcended national boundaries.

Hardware Realizations: A Global Perspective

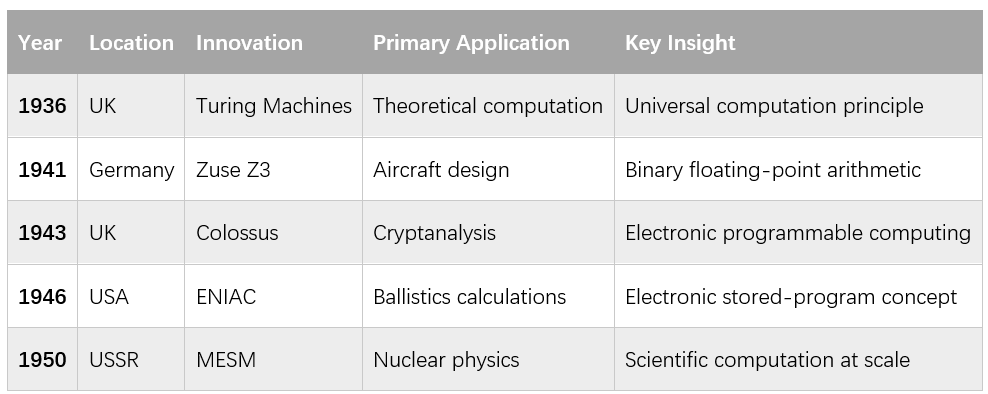

The table below illustrates the remarkable synchronicity of computational developments across different continents:

Each system addressed specific national priorities while advancing universal principles of computation. Colossus, developed at Bletchley Park for breaking the Lorenz cipher, processed data at unprecedented speeds while remaining classified for decades [Core Claim: UK National Archives]. ENIAC's ballistics calculations represented the transition from human computers to electronic computation, achieving in 30 seconds what required 20 hours of manual calculation, a speedup of roughly 2,400 times [Core Claim: Goldstine 1946].

Capability Boundaries of Early Systems

These early machines excelled at precisely defined computational tasks while revealing fundamental limitations that would shape AI development for decades:

What They Could Do:

- Perform complex mathematical calculations with unprecedented speed

- Store and manipulate large quantities of numerical data

- Execute programmed sequences of operations reliably

- Solve differential equations for scientific and military applications

What They Couldn't Do:

- Adapt their behavior based on experience

- Generalize solutions from one problem domain to another

- Recognize patterns in sensory data

- Learn from mistakes or improve performance over time

This capability gap—between computational power and adaptive intelligence—would define the central challenge of artificial intelligence for the following decades [Interpretive Claim].

2.2 The Learning Breakthrough

Beyond Calculation: The Emergence of Adaptive Systems

While early computers excelled at predetermined calculations, the breakthrough toward genuine artificial intelligence required systems that could improve their own performance. Two pioneering efforts in the early 1950s demonstrated that machines could transcend their initial programming.

Claude Shannon's 1950 paper "Programming a Computer for Playing Chess" represented the first systematic attempt to encode strategic thinking in computational form [Core Claim: Shannon 1950]. Shannon did not build a running program; he described how one could evaluate chess positions using weighted features such as material balance and piece mobility, and use a minimax procedure to select moves. Because the number of positions grows explosively with search depth, he expected such a program to look only a few moves ahead. Even so, the paper established the fundamental approach of combining evaluation functions with systematic search that would dominate game-playing AI for decades.
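The combination of a weighted evaluation function with minimax search can be sketched in a few lines. This is a minimal illustration on a hand-built toy game tree, not a chess engine and not Shannon's own formulation: the `Position` class, the feature names, and the weights in `WEIGHTS` are all assumptions made for the example.

```python
from dataclasses import dataclass, field

# Hypothetical weights chosen for illustration; not taken from Shannon's paper.
WEIGHTS = {"material": 1.0, "mobility": 0.1}

@dataclass
class Position:
    features: dict                                  # e.g. {"material": 1, "mobility": 2}
    children: list = field(default_factory=list)    # positions reachable in one move

def evaluate(pos):
    """Weighted sum of features, scored from the maximizing player's view."""
    return sum(WEIGHTS[name] * value for name, value in pos.features.items())

def minimax(pos, depth, maximizing):
    """Search `depth` plies ahead, then fall back on the static evaluation."""
    if depth == 0 or not pos.children:
        return evaluate(pos)
    scores = [minimax(child, depth - 1, not maximizing) for child in pos.children]
    return max(scores) if maximizing else min(scores)

def best_move(pos, depth):
    """Index of the child position the maximizing player should choose."""
    return max(range(len(pos.children)),
               key=lambda i: minimax(pos.children[i], depth - 1, False))

# Toy tree: move 0 looks tempting (it could win 3 points of material),
# but the opponent's best reply leaves us a point down instead.
move_0 = Position({"material": 0, "mobility": 0}, [
    Position({"material": 3, "mobility": 0}),
    Position({"material": -1, "mobility": 0}),
])
move_1 = Position({"material": 0, "mobility": 0}, [
    Position({"material": 1, "mobility": 2}),
    Position({"material": 0, "mobility": 5}),
])
root = Position({"material": 0, "mobility": 0}, [move_0, move_1])

print(best_move(root, depth=2))
```

The search assumes the opponent always picks the reply that is worst for the maximizing player, which is why the tempting first move is rejected in favor of the safer second one.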

Arthur Samuel's checkers program, developed on the IBM 701 from 1952 onwards, achieved something qualitatively different: genuine learning from experience [Core Claim: Samuel 1959]. Samuel's innovation lay not just in programming checkers knowledge, but in creating a system that could modify its own evaluation function based on game outcomes.

The Mechanics of Machine Learning

Samuel's approach involved several key innovations that established foundational principles for machine learning:

- Self-Play Training: The program played thousands of games against itself, using outcomes to adjust the weights assigned to different board features

- Feature Learning: Rather than relying solely on programmer intuition, the system discovered which board characteristics best predicted victory

- Parameter Optimization: Mathematical techniques automatically tuned the relative importance of different strategic factors
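The idea of tuning evaluation weights from game outcomes can be illustrated with a deliberately simplified sketch. It is not Samuel's actual procedure: it uses a plain delta-rule update on a linear evaluation function, and the feature order, the learning rate, and the four training positions are invented for the example.

```python
# Toy sketch of learning evaluation weights from game outcomes.
# NOT Samuel's actual procedure: a plain delta-rule update on made-up data.

# Hypothetical feature order: piece advantage, king advantage, mobility advantage.
# Each entry pairs a board's features with the final result of that game
# (+1 = win, -1 = loss). The positions are invented for illustration.
TRAINING_GAMES = [
    ([2, 1, 0], +1),
    ([1, 2, 2], +1),
    ([-2, 0, -2], -1),
    ([-1, -3, 0], -1),
]

def evaluate(weights, features):
    """Linear evaluation function: weighted sum of board features."""
    return sum(w * f for w, f in zip(weights, features))

def update_weights(weights, features, outcome, learning_rate=0.05):
    """Nudge each weight so the evaluation moves toward the game outcome."""
    error = outcome - evaluate(weights, features)
    return [w + learning_rate * error * f for w, f in zip(weights, features)]

def train(games, epochs=200):
    """Repeatedly sweep over the recorded games, updating after each one."""
    weights = [0.0, 0.0, 0.0]  # start with no opinion about any feature
    for _ in range(epochs):
        for features, outcome in games:
            weights = update_weights(weights, features, outcome)
    return weights

weights = train(TRAINING_GAMES)
print([round(w, 2) for w in weights])
```

The toy data were constructed to be consistent with weights of 0.4, 0.2, and 0.1, so the learned weights should settle near those values. The point is only that the relative importance of each feature is recovered from outcomes rather than supplied by the programmer.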

The results were remarkable: within a few years, Samuel's program could consistently defeat its creator and play at the level of a strong amateur [Core Claim: Samuel 1959]. This represented one of the earliest documented cases of a machine exceeding its programmer's capability in a cognitive task.

Why Games Mattered: Perfect Information Environments

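Games provided ideal testing grounds for early AI systems. Chess and checkers are what researchers call "perfect information" environments, in which both players can see the complete state of the game, and they offered several further advantages: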

- Clear Objective Functions: Winning provides unambiguous success criteria

- Controlled Complexity: Rule-based systems with finite state spaces

- Unlimited Practice Opportunities: Machines could play thousands of games for training

- Measurable Progress: Performance improvements could be quantified objectively

This established a pattern that continues today: games serve as AI's proving ground because they combine intellectual challenge with measurable outcomes [Interpretive Claim]. From Samuel's checkers to AlphaGo's Go mastery, games have consistently revealed the current boundaries of machine intelligence.

2.3 Global Foundations Timeline

Concurrent Innovations Across Continents

The development of computational intelligence was truly global, with parallel innovations emerging across different technological and cultural contexts:

European Innovations

European efforts focused heavily on cryptography and scientific computation. Colossus, operating in secret at Bletchley Park, demonstrated that electronic machines could process symbolic information at unprecedented speeds. Tommy Flowers' first machine used roughly 1,500 electronic valves and helped break high-level German military teleprinter traffic enciphered with the Lorenz cipher [Core Claim: UK National Archives].

Soviet Cybernetics

Soviet researchers approached computation through the lens of cybernetics—the study of control and communication in animals and machines. Sergei Lebedev's MESM (Малая Электронно-Счетная Машина), completed in 1950, performed nuclear physics calculations for the Soviet atomic program [Core Claim: Kiev Institute of Cybernetics Archives]. More significantly, Soviet cybernetics emphasized feedback loops and self-regulation, concepts that would prove crucial for later developments in machine learning.

Kolmogorov's mathematical work on probability and information theory provided theoretical foundations that transcended ideological boundaries. His contributions to algorithmic information theory and stochastic processes would later enable statistical approaches to machine learning [Context Claim: Li & Vitányi 1997].

The Paradox of Constraints

Remarkably, the severe hardware limitations of this era paradoxically drove innovation rather than hindering it:

- Memory Constraints: The IBM 701's 2,048-word memory forced Samuel to develop extraordinarily efficient algorithms that remain elegant by modern standards

- Processing Limitations: Slow computation speeds necessitated clever heuristics and approximation methods

- Programming Difficulty: Punch-card programming required such precision that it cultivated rigorous algorithmic thinking

These constraints forced researchers to understand fundamental principles rather than relying on brute-force approaches [Interpretive Claim]. The algorithmic elegance born from necessity would influence computing for decades.

2.4 Early Societal Implications

Military Origins and Ethical Questions

From its inception, computational intelligence was intertwined with questions of warfare and human agency. ENIAC's primary mission—calculating artillery firing tables—immediately raised questions about automated decision-making in life-and-death situations [Interpretive Claim]. While these early systems required human oversight for all decisions, they established precedents for machine-assisted military planning that continue to shape contemporary debates over autonomous weapons.

Scientific Revolution

The transformation of scientific practice proved equally profound. Meteorologists could begin to compute forecasts numerically instead of extrapolating by hand from current observations; the first such forecasts were run on ENIAC in 1950. Nuclear physicists used Monte Carlo methods, which estimate quantities by averaging over many random trials, to model atomic behavior, accelerating weapons development and peaceful applications alike [Context Claim: Metropolis & Ulam 1949].
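The core idea behind Monte Carlo methods, estimating a quantity by averaging over many random trials, can be shown with a standard textbook example. The sketch below estimates pi by random sampling; it is an illustration of the general technique only, not the neutron-transport calculations of the period, and the function name and default seed are assumptions.

```python
import random

def estimate_pi(num_samples, seed=0):
    """Estimate pi from the fraction of random points inside a quarter circle."""
    rng = random.Random(seed)   # fixed seed so repeated runs give the same estimate
    inside = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()   # uniform point in the unit square
        if x * x + y * y <= 1.0:            # does it fall inside the quarter circle?
            inside += 1
    # The quarter circle covers pi/4 of the unit square.
    return 4.0 * inside / num_samples

print(estimate_pi(100_000))
```

The estimate improves only slowly as samples are added, with the error shrinking roughly in proportion to one over the square root of the sample count, which is why such methods became practical only once fast electronic computation was available.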

Human-Machine Collaboration Patterns

The relationship between humans and early computers established patterns that persist today. ENIAC's operation required teams of skilled programmers and operators, predominantly women, many of whom had previously worked as "human computers" performing calculations by hand [Core Claim: Light 1999]. They didn't simply operate machines; they negotiated the boundaries between human intuition and mechanical precision, developing some of the first working practices for human-computer collaboration.

Global Competition and Knowledge Sharing

Despite political tensions, computational innovations crossed national boundaries through scientific publications and academic exchanges. The fundamental insights about computation, feedback, and information processing transcended ideological divisions, suggesting that artificial intelligence would develop as a global scientific endeavor rather than a purely national enterprise [Interpretive Claim].

The foundations laid during this era—from Turing's theoretical insights to Samuel's learning algorithms—established both the promise and the persistent challenges of artificial intelligence. These early systems demonstrated that machines could exceed human capabilities in specific domains while revealing the enormous gap between computational power and general intelligence. Understanding this foundational period illuminates why subsequent AI development would follow cycles of optimism and disappointment, as researchers repeatedly discovered that intelligence proves far more complex than initially imagined.
